StrongMinds should not be a top-rated charity (yet)

GWWC lists StrongMinds as a “top-rated” charity. They do so because Founders Pledge determined, in its report into mental health, that StrongMinds is cost-effective.

I could say here, “and that report was written in 2019 - either they should update the report or remove the top rating” and we could all go home. In fact, most of what I’m about to say does consist of “the data really isn’t that clear yet”.

I think the strongest statement I can make (which I doubt StrongMinds would disagree with) is:

“StrongMinds have made limited effort to be quantitative in their self-evaluation, haven’t continued monitoring impact after intervention, and haven’t done the research they once claimed they would. They have not been vetted sufficiently to be considered a top charity, and only one independent group has done the work to look into them.”

My key issues are:

Survey data is notoriously noisy and the data here seems to be especially so

There are reasons to be especially doubtful about the accuracy of the survey data (StrongMinds have twice updated their level of uncertainty in their numbers due to social-desirability bias, or SDB)

One of the main models is (to my eyes) off by a factor of ~2 based on an unrealistic assumption about depression (medium confidence)

StrongMinds haven’t continued to publish new data since their trials very early on

StrongMinds seem to be somewhat deceptive about how they market themselves as “effective” (and EA are playing into that by holding them in such high esteem without scrutiny)

What’s going on with the PHQ-9 scores?

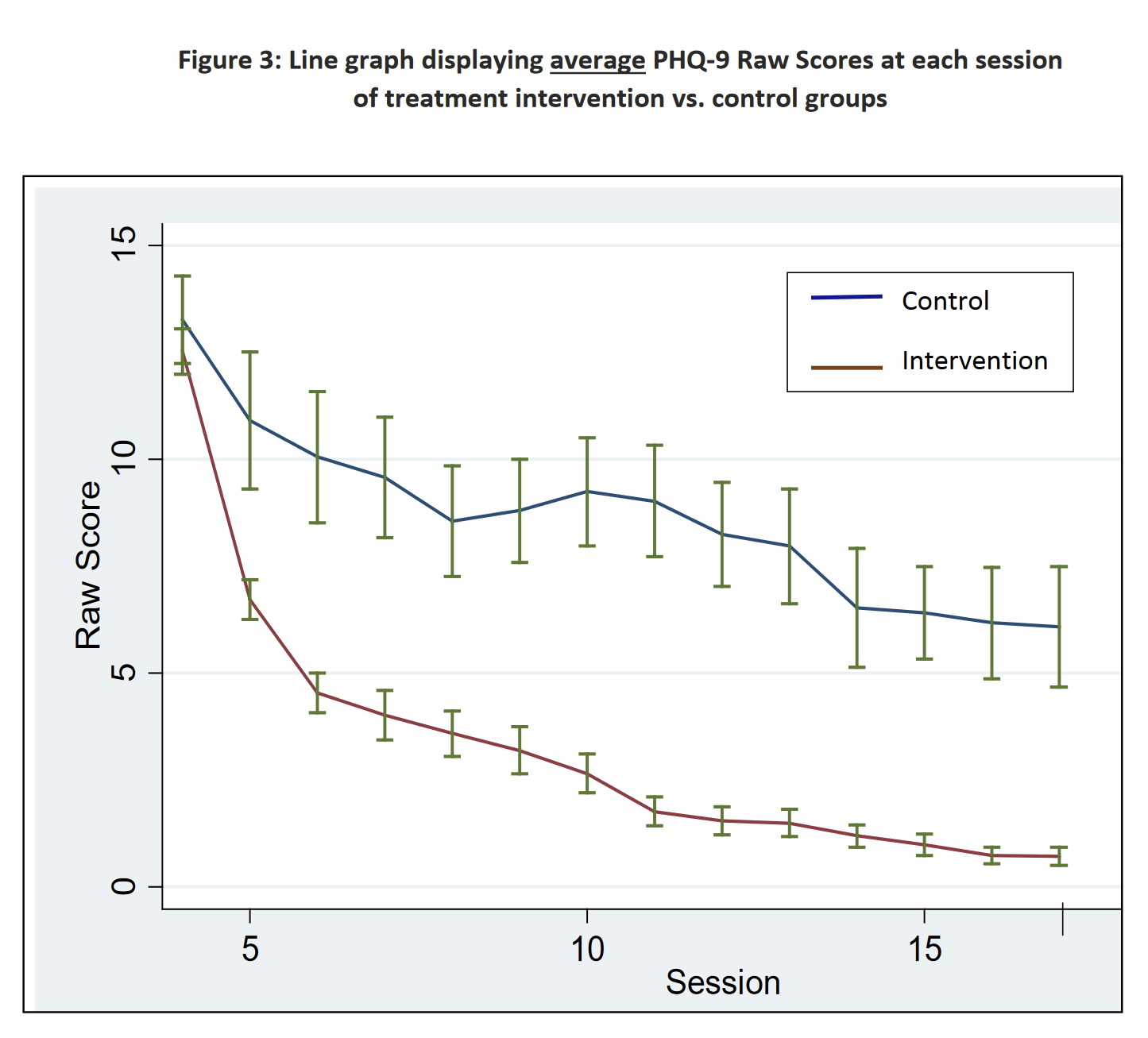

In their last four quarterly reports, StrongMinds have reported PHQ-9 reductions of: -13, -13, -13, -13. In their Phase II report, raw scores dropped by a similar amount:

However, their Phase II analysis reports (emphasis theirs):

As evidenced in Table 5, members in the treatment intervention group, on average, had a 4.5 point reduction in their total PHQ-9 Raw Score over the intervention period, as compared to the control populations. Further, there is also a significant visit effect when controlling for group membership. The PHQ-9 Raw Score decreased on average by 0.86 points for a participant for every two groups she attended. Both of these findings are statistically significant.

Founders Pledge’s cost-effectiveness model uses the 4.5-point reduction (and further reduces it, for reasons we’ll get into later).

Based on Phase I and II surveys, it seems to me that a much more cost-effective intervention would be to go around surveying people. I’m not exactly sure what’s going on with the Phase I / Phase II data, but as best I can tell, in Phase I we had a ~7.5 vs ~5.1 PHQ-9 reduction from “being surveyed” vs “being part of the group”, and in Phase II we had a ~5.1 vs ~4.5 PHQ-9 reduction from “being surveyed” vs “being part of the group”. For what it’s worth, I don’t believe this is likely the case; I think it’s just a strong sign that the survey mechanism being used is inadequate to determine what is going on.

There are a number of potential reasons we might expect to see such large improvements in the mental health of the control group (as well as the treatment group).

Mean-reversion - StrongMinds happens to sample people at a low ebb and so the progression of time leads their mental health to improve of its own accord

“People in targeted communities often incorrectly believe that StrongMinds will provide them with cash or material goods and may therefore provide misleading responses when being diagnosed.” Potential participants fake their initial scores in order to get into the program, either because they (mistakenly) think there is some material benefit to being in the program or because they think it makes them more likely to get into a program they think would have value for them.
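To make the mean-reversion point concrete, here is a minimal simulation sketch. Every number in it (the score distribution, the day-to-day noise, the screening threshold) is a hypothetical assumption chosen for illustration, not anything taken from StrongMinds’ data, and scores are not clamped to the real PHQ-9 range. It only shows that if you enrol people because they scored high on a noisy survey, their average score falls on re-survey even with no intervention at all.

```python
import random
from statistics import mean


def simulate_mean_reversion(n_people=10_000, threshold=15.0, seed=0):
    """Return (mean screening score, mean re-survey score) for enrolled people.

    All parameters are hypothetical. Nobody in this simulation is treated.
    """
    rng = random.Random(seed)
    screening_scores = []
    resurvey_scores = []
    for _ in range(n_people):
        # A person's long-run average score (assumed distribution).
        usual_level = rng.gauss(10.0, 4.0)
        # Score on the day of screening: usual level plus day-to-day noise.
        screening = usual_level + rng.gauss(0.0, 4.0)
        if screening >= threshold:
            # Enrolled. Re-survey later with fresh, independent noise.
            resurvey = usual_level + rng.gauss(0.0, 4.0)
            screening_scores.append(screening)
            resurvey_scores.append(resurvey)
    return mean(screening_scores), mean(resurvey_scores)


if __name__ == "__main__":
    before, after = simulate_mean_reversion()
    print(f"mean score at screening: {before:.1f}")
    print(f"mean score at re-survey: {after:.1f}")
```

The size of the drop depends entirely on the assumed noise and threshold, so this says nothing about how large the effect is in the actual trials, only that a control group improving on its own is what you would expect from this mechanism.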

What’s going on with the ‘social-desirability bias’?

The Phase I and Phase II trials reported that 97% and 99% of their patients, respectively, were “depression-free” after the trial. During the Phase II trial, StrongMinds realised that these numbers were inaccurate, and so reduced the Phase II figure from 99% to 92%, based on the results from two weeks prior to the end.

In their follow-up study of Phases I and II, they then say:

While both the Phase 1 and 2 patients had 95% depression-free rates at the completion of formal sessions, our Impact Evaluation reports and subsequent experience has helped us to understand that those rates were somewhat inflated by social desirability bias, roughly by a factor of approximately ten percentage points. This was due to the fact that their Mental Health Facilitator administered the PHQ-9 at the conclusion of therapy. StrongMinds now uses external data collectors to conduct the post-treatment evaluations. Thus, for effective purposes, StrongMinds believes the actual depression-free rates for Phase 1 and 2 to be more in the range of 85%.

I would agree with StrongMinds that they still had social-desirability bias in their Phase I and II reports, although it’s not clear to me they have fully removed it now. This also relates to my earlier point about how much improvement we see in the control group. If the pre-treatment scores overstate depression and the post-treatment scores understate it, how confident should we be in the magnitude of these effects?

How bad is depression?

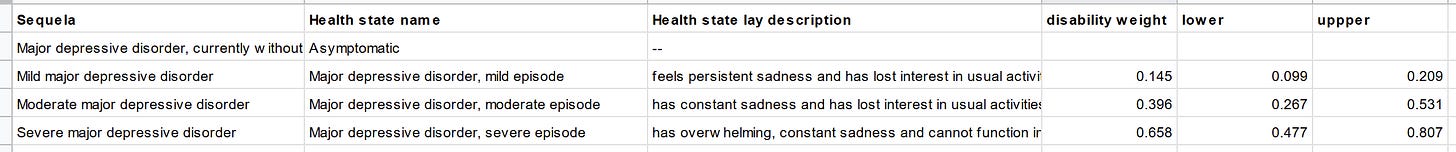

Severe depression has a DALY weighting of 0.66.

(Founders Pledge report, via Global Burden of Disease Disability Weights)

The key section of the Disability Weights table reads as follows:

My understanding (based on the lay descriptions, IANAD etc) is that “severe depression” is not quite the right way to describe the thing which has a DALY weighting of 0.66. “severe depression during an episode has a DALY weighting of 0.66” would be more accurate.

Assuming linear decline in severity on the PHQ-9 scale.

(Founders Pledge model)

Furthermore, whilst the disability weights are linear between “mild”, “moderate” and “severe”, the threshold for “mild” in PHQ-9 terms is not ~1/3 of the way up the scale. Therefore there is a much smaller change in disability weight for a 12-point drop from 12 to 0 than for one from 24 to 12. (The former takes you from roughly “mild” to asymptomatic, a change of ~0.15; the latter takes you from a “severe episode” to a “mild episode”, a change of ~0.51, which is much larger.)

This change would roughly halve the effectiveness of the intervention, using the Founders Pledge model.
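The arithmetic behind “roughly halve” can be sketched as below. This is not the Founders Pledge spreadsheet; it is a toy comparison under assumptions I am making explicit: the anchor points (PHQ-9 of 12 ≈ 0.15, PHQ-9 of 24 ≈ 0.66) are just the rough figures from the paragraph above, and the function names are made up for illustration.

```python
# Rough anchor points from the text above (assumptions, not official cut-offs):
# PHQ-9 of 0 -> weight 0, 12 -> ~0.15 ("mild"), 24 -> ~0.66 ("severe episode").
ANCHORS = ((0.0, 0.0), (12.0, 0.15), (24.0, 0.66))


def linear_weight(phq9, top_score=24.0, top_weight=0.66):
    """Disability weight if it scaled in a straight line with PHQ-9 score."""
    return top_weight * phq9 / top_score


def stepped_weight(phq9, anchors=ANCHORS):
    """Disability weight interpolated between the rough anchor points."""
    for (x0, y0), (x1, y1) in zip(anchors, anchors[1:]):
        if x0 <= phq9 <= x1:
            return y0 + (y1 - y0) * (phq9 - x0) / (x1 - x0)
    raise ValueError("score outside the range covered by the anchors")


def benefit(weight_fn, start, end):
    """Reduction in disability weight from moving start -> end on PHQ-9."""
    return weight_fn(start) - weight_fn(end)


if __name__ == "__main__":
    for start, end in ((12, 0), (24, 12)):
        lin = benefit(linear_weight, start, end)
        stp = benefit(stepped_weight, start, end)
        print(f"{start} -> {end}: linear {lin:.2f}, anchored {stp:.2f}")
```

Under these assumptions, a drop from 12 to 0 is worth about 0.33 on the straight-line model but only about 0.15 once the weights are anchored to the severity thresholds, which is where the factor of roughly two comes from.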

Lack of data

My biggest gripe with StrongMinds is they haven’t continued to provide follow-up analysis for any of their cohorts (aside from Phase I and II) despite saying they would in their 2017 report:

Looking forward, StrongMinds will continue to strengthen our evaluation efforts and will continue to follow up with patients at 6 or 12 month intervals. We also remain committed to implementing a much more rigorous study, in the form of an externally-led, longitudinal randomized control trial, in the coming years.

As far as I can tell, based on their conversation with GiveWell:

StrongMinds has decided not pursue a randomized controlled trial (RCT) of its program in the short term, due to:

High costs – Global funding for mental health interventions is highly limited, and StrongMinds estimates that a sufficiently large RCT of its program would cost $750,000 to $1 million.

Sufficient existing evidence – An RCT conducted in 2002 in Uganda found that weekly IPT-G significantly reduced depression among participants in the treatment group. Additionally, in October 2018, StrongMinds initiated a study of its program in Uganda with 200 control group participants (to be compared with program beneficiaries)—which has demonstrated strong program impact. The study is scheduled to conclude in October 2019.

Sufficient credibility of intervention and organization – In 2017, WHO formally recommended IPT-G as first line treatment for depression in low- and middle-income countries. Furthermore, the woman responsible for developing IPT-G and the woman who conducted the 2002 RCT on IPT-G both serve as mental health advisors on StrongMinds' advisory committee.

Aside from the first, I don’t agree with any of these bullet points (and even on the first, I think there should be ways to publish more data within the context of their current data).

On the bright side(!) as far as I can tell, we should be seeing new data soon. StrongMinds and Berk Ozler should have finished collecting their data for a larger RCT on StrongMinds. It’s a shame it’s not a direct comparison between cash transfers and IPT-G (the arms are: IPT-G, IPT-G + cash transfers, no-intervention), but it will still be very valuable data for evaluating them.

Misleading?

This implies Charity Navigator thinks they are one of the world’s most effective charities. But in fact Charity Navigator haven’t evaluated them for “Impact & Results”.

WHO: There’s no external validation here (afaict). They just use StrongMinds’ own numbers and talk around the charity a bit.

I’m going to leave aside discussing HLI here. Whilst I think they have some of the deepest analysis of StrongMinds, I am still confused by some of their methodology, and it’s not clear to me what their relationship to StrongMinds is. I plan on going into more detail there in future posts. The key thing to understand about the HLI methodology is that it follows the same structure as the Founders Pledge analysis, and so all the problems I mention above regarding data apply just as much to them as to FP.

The “Inciting Altruism” profile, well, read it for yourself.

Founders Pledge is the only independent report I've found - and is discussed throughout this article.

GiveWell staff members’ personal donations:

I plan to give 5% of my total giving to StrongMinds, an organization focused on treating depression in Africa. I have not vetted this organization anywhere nearly as closely as GiveWell’s top charities have been vetted, though I understand that a number of people in the effective altruism community have a positive view of StrongMinds within the cause area of mental health (though I don’t have any reason to think it is more cost-effective than GiveWell’s top charities). Intuitively, I believe mental health is an important cause area for donors to consider, and although we do not have GiveWell recommendations in this space, I would like to learn more about this area by making a relatively small donation to an organization that focuses on it.

This is not external validation.

The EA Forum post is another HLI piece.

I don’t have access to the Stanford piece; it’s paywalled.

Conclusion

What I would like to happen is:

Founders Pledge update or withdraw their recommendation of StrongMinds

GWWC remove StrongMinds as a top charity

Ozler's study comes out saying it's super effective

Everyone reinstates StrongMinds as a top charity, including some evaluators who haven't done so thus far